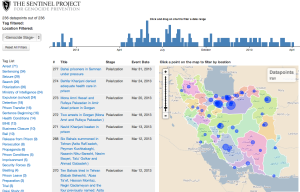

I recently became involved with XSCE (School Server Community Edition) and its “Internet in a Box” project, to make OpenStreetMap (OSM) maps available offline. Some of their deployments in remote schools around the world do not have consistent internet access. The idea is to download and store a set of knowledge resources (Wikipedia, Khan Academy videos, OSM maps, etc.) on a server, which then serves those resources offline to laptops connected to the internal network.

Here are the constraints that need to be considered:

- The laptops that will display the maps are very underpowered. They are often XO laptops from the One Laptop per Child (OLPC) project.

- The servers, while not as underpowered as the laptops, are typically quite limited as well in disk space, RAM and CPU.

- The server handles other tasks besides serving maps, so the maps cannot use all of the available hardware.

- Server specs are not consistent from one deployment to the next (but they all have in common that they must run the XSCE software).

- Deployments’ needs are rarely the same: they can be in any region of the world, and they might not want the same level of map detail for the same countries.

- The server is typically configured by a volunteer who has internet access, before it is deployed to a remote location. While these volunteers do have IT knowledge, the setup needs to be simple enough.

- The maps do not need to be updated every week, but they need to be relatively recent. If the server gets internet access once in a while, it needs to be able to update the maps relatively easily.

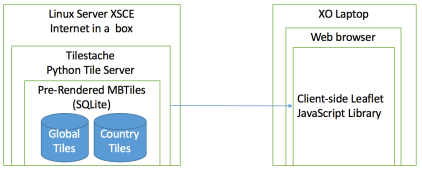

The chosen solution is shown in the architecture diagram.

Since the server specs are limited, the map tiles need to be pre-rendered before they reach the XSCE Internet in a Box server. They cannot be rendered on the fly with the native OSM stack, which uses a PostgreSQL database with PostGIS, because that requires too many resources and would require provisioning a different database for each deployment.

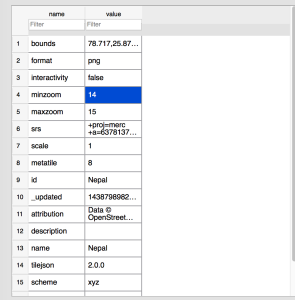

The pre-rendered tiles are stored in an MBTiles file, a format created by Mapbox that efficiently stores millions of tiles in a SQLite database (which is itself a single file). It is efficient because it avoids storing duplicate tiles, which are frequent over large areas of water. This also simplifies deployment, because all you have to do is move a few files around instead of potentially copying millions of PNG tiles stored directly on disk.
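To make the deduplication idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `metadata` table and the `tiles` columns (`zoom_level`, `tile_column`, `tile_row`, `tile_data`) come from the MBTiles spec. The `images` and `map` tables behind the `tiles` view are an assumption for illustration, modelled on the layout some MBTiles writers use, and the tile contents are fake bytes rather than real PNGs.

```python
import hashlib
import sqlite3

# "tiles" is exposed as a view so readers see the standard MBTiles columns,
# while identical images are stored only once in "images".
# The "images" and "map" table names are illustrative assumptions.
SCHEMA = """
CREATE TABLE metadata (name TEXT, value TEXT);
CREATE TABLE images (tile_id TEXT PRIMARY KEY, tile_data BLOB);
CREATE TABLE map (zoom_level INTEGER, tile_column INTEGER,
                  tile_row INTEGER, tile_id TEXT);
CREATE VIEW tiles AS
    SELECT map.zoom_level, map.tile_column, map.tile_row, images.tile_data
    FROM map JOIN images ON images.tile_id = map.tile_id;
"""


def flip_y(z, y):
    """Convert between XYZ (as used by Leaflet) and TMS row numbering."""
    return (2 ** z - 1) - y


def add_tile(conn, z, x, y, data):
    """Store a tile; identical image bytes are written only once."""
    tile_id = hashlib.sha1(data).hexdigest()
    conn.execute("INSERT OR IGNORE INTO images VALUES (?, ?)", (tile_id, data))
    conn.execute("INSERT INTO map VALUES (?, ?, ?, ?)",
                 (z, x, flip_y(z, y), tile_id))


def read_tile(conn, z, x, y):
    """Return the tile bytes for XYZ coordinates, or None if absent."""
    row = conn.execute(
        "SELECT tile_data FROM tiles "
        "WHERE zoom_level = ? AND tile_column = ? AND tile_row = ?",
        (z, x, flip_y(z, y)),
    ).fetchone()
    return bytes(row[0]) if row else None


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

ocean = b"fake-bytes-for-an-all-blue-tile"
add_tile(conn, 3, 0, 0, ocean)
add_tile(conn, 3, 1, 0, ocean)  # same image, different position
add_tile(conn, 3, 2, 0, b"fake-bytes-for-a-coast-tile")

stored = conn.execute("SELECT COUNT(*) FROM images").fetchone()[0]
addressable = conn.execute("SELECT COUNT(*) FROM tiles").fetchone()[0]
print(stored, "images stored for", addressable, "tiles")
```

Three tile positions end up backed by only two stored images, which is where the savings over open water come from.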

To save precious disk space, there will be a global planet OSM MBTiles file (which goes no higher than zoom level 10, roughly the city level), and each country will then be available for download as a separate pre-rendered MBTiles file (zoom levels 11 to 15). For example, a deployment in Nepal could download the planet MBTiles file to the server to get the map of the whole world, and then download only the higher-zoom file for Nepal, to be able to zoom in to the street level. Downloading the whole world up to zoom level 15 would require well over 1 TB of disk space, which we can’t handle. This is why we want high zoom levels only for the countries that a deployment needs, depending on how much disk space it has to spare.
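The arithmetic behind this split is easy to check. In the standard tile pyramid each zoom level has four times as many tiles as the previous one, so level `z` covers the world with `4 ** z` tiles. The sketch below only counts tile positions; it says nothing about bytes on disk, which depend on the rendering and on how many duplicate tiles get collapsed.

```python
def tiles_at_zoom(z):
    """Number of tile positions covering the whole world at zoom level z."""
    return 4 ** z


def tiles_in_range(min_zoom, max_zoom):
    """Total tile positions for all zoom levels in [min_zoom, max_zoom]."""
    return sum(tiles_at_zoom(z) for z in range(min_zoom, max_zoom + 1))


planet_low = tiles_in_range(0, 10)    # 1,398,101 positions
planet_full = tiles_in_range(0, 15)   # 1,431,655,765 positions

print(f"zoom 0-10: {planet_low:,} tiles")
print(f"zoom 0-15: {planet_full:,} tiles")
print(f"ratio: about {planet_full // planet_low}x")
```

Going from zoom 10 to zoom 15 for the whole planet multiplies the number of tile positions by roughly a thousand, which is why the high zoom levels are only worth storing for the countries a deployment cares about.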

To serve the MBTiles files from a web server, there are a few options, such as TileStream (Node.js) and TileStache (Python). I chose TileStache because it supports composite layers, which makes it possible to serve multiple MBTiles files at the same time. TileStream only supports serving one MBTiles file at a time, so multiple MBTiles files would have to be merged together; that is possible, but it complicates deployment and makes it harder to add or remove specific countries later on. TileStache can serve tiles over WSGI, CGI and mod_python with Apache. XSCE also happens to already run multiple Python tools and uses WSGI with another one, so the integration was easier (click here for details on the integration).
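As a rough idea of what such a setup could look like, the sketch below generates a TileStache-style JSON configuration with one `mbtiles` layer per file and one layer that stacks them. Everything specific here is an assumption for illustration: the layer names (`planet`, `nepal`, `osm`), the file paths, and the choice of TileStache's `sandwich` provider as the compositing mechanism. The exact provider options, and how tiles missing from a country file are handled, should be checked against the TileStache documentation.

```python
import json


def build_config(planet_path, country_paths):
    """Build a TileStache-style config dict (hypothetical names and paths).

    planet_path: path to the low-zoom planet MBTiles file.
    country_paths: dict mapping a layer name to a country MBTiles path.
    """
    layers = {
        "planet": {"provider": {"name": "mbtiles", "tileset": planet_path}},
    }
    # The stack is drawn bottom to top: planet first, countries over it.
    stack = [{"src": "planet"}]
    for name, path in sorted(country_paths.items()):
        layers[name] = {"provider": {"name": "mbtiles", "tileset": path}}
        stack.append({"src": name})

    # Assumed composite layer that clients would actually request.
    layers["osm"] = {"provider": {"name": "sandwich", "stack": stack}}
    return {"cache": {"name": "Test"}, "layers": layers}


config = build_config(
    "/library/maps/planet_z0-z10.mbtiles",            # hypothetical path
    {"nepal": "/library/maps/nepal_z11-z15.mbtiles"},  # hypothetical path
)
print(json.dumps(config, indent=2))
```

Adding or removing a country then comes down to dropping a file on the server and regenerating the configuration, with no merging of MBTiles files.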

Then all you need is a simple HTML page that loads Leaflet as a client-side JavaScript library and is configured to query the TileStache tile server located on the local network.
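To show what "querying the tile server" means in practice, here is a small Python sketch of the requests a map client ends up making. It uses the standard slippy-map formula to turn a latitude and longitude into tile coordinates, then builds a URL in the `/{layer}/{z}/{x}/{y}.png` form. The host name `schoolserver.local`, the `/maps` prefix and the layer name `osm` are hypothetical placeholders, not the actual XSCE setup.

```python
import math


def latlon_to_tile(lat, lon, zoom):
    """Return the XYZ tile (x, y) containing a WGS84 point at a zoom level."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so that points on the far edge still map to a valid tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tile_url(base, layer, zoom, x, y, ext="png"):
    """Build a tile URL; base and layer are deployment-specific."""
    return f"{base}/{layer}/{zoom}/{x}/{y}.{ext}"


# Kathmandu, at the highest zoom level offered by the country files.
x, y = latlon_to_tile(27.7172, 85.3240, 15)
print(tile_url("http://schoolserver.local/maps", "osm", 15, x, y))
```

Leaflet does this computation itself for every tile visible in the viewport; the page only has to give it the URL template.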

This solution is based entirely on raster tiles rather than vector tiles. While vector tiles offer significant savings in disk usage, they require much more CPU to render on the client, as well as newer browsers, which is not feasible with the type of hardware that we have (XO laptops).

The big remaining question is: where are those tiles rendered, where are they stored, and how can they be downloaded on demand by the XSCE server? This is a topic for a future blog post!